Home Energy Steward

With energy demand rising rapidly and vehicles going electric, managing household energy costs will become a major chore. We are here to help, and we are excited to introduce you to the future of hands-free energy management.

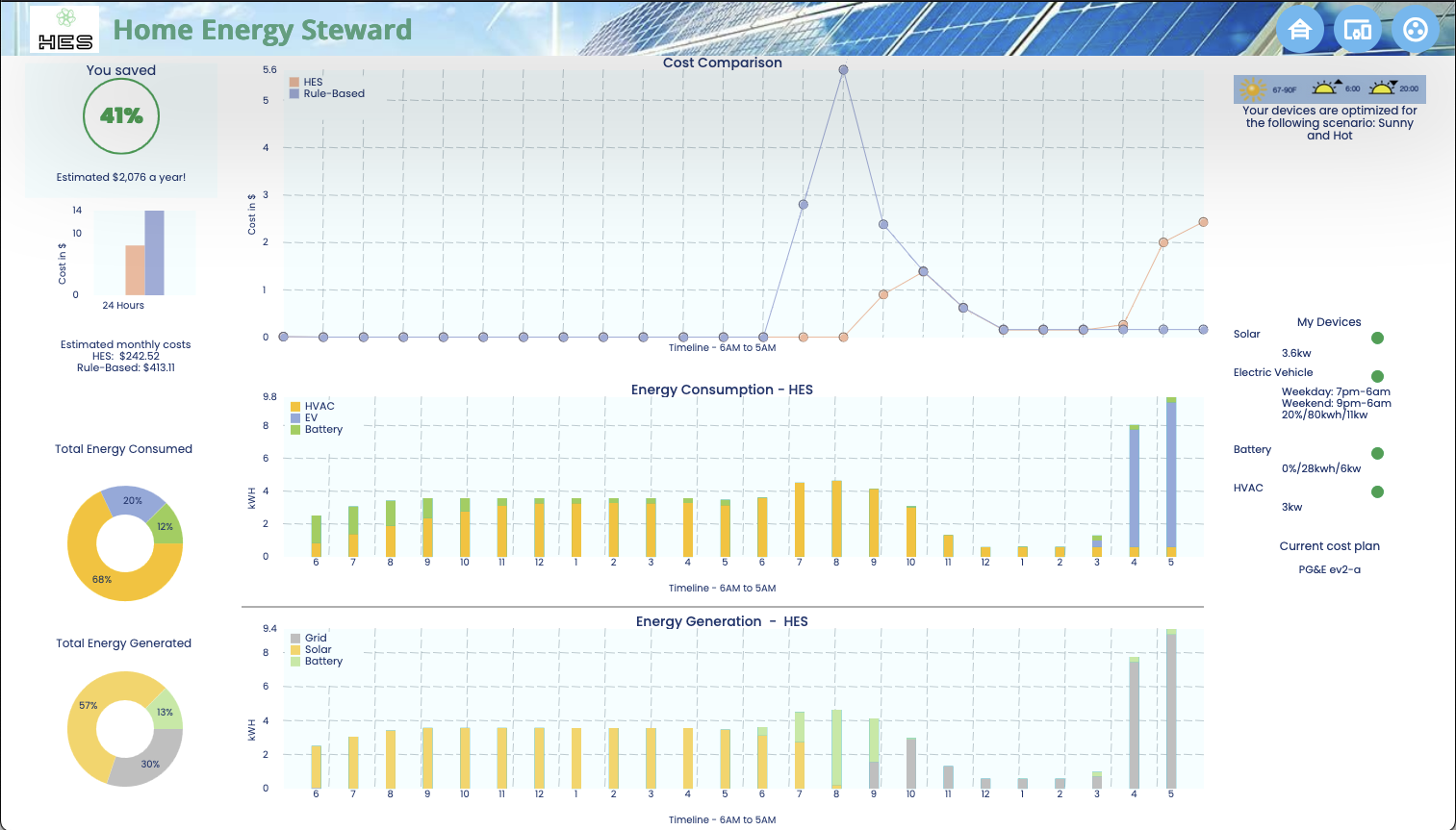

The Home Energy Steward (HES) is your reinforcement learning assistant for optimized electricity costs, effortless energy management and a greener planet, one household at a time. HES constantly manages power storage and usage in your household to minimize energy cost.

Visit our homepage here and try the Home Energy Steward here.

Problem & Motivation

There are several reasons for high energy prices: higher production costs, restricted pricing tiers, subsidies and so on. Besides home users, EV charging stations and real estate developments could also benefit from optimized energy usage. The transition to EVs, mandates to phase out gas-powered vehicles and an ever-increasing population will further strain the power grid, so it is important to address this problem. A PGE executive we spoke with agreed that there are no good solutions yet.

Market research firm Technavio estimates that the home energy management systems market will grow at an annual rate of 8%. Given the trends toward electrification and sustainability, we believe this is an emerging space with potential for large impact. From our research, the market is still fragmented, and existing solutions focus on hardware integration, energy monitoring and rule-based optimization. Our product is differentiated by its use of reinforcement learning to learn optimal policies for controlling devices such as EVs and batteries. Compared to rule-based competitors, our product is easy to use and intelligent, and it yields significant cost savings.

The users of our product are households with solar panels, batteries and EVs. Our super users are those who have high electricity bills and are environmentally conscious. Our go-to-market strategy is through businesses. This allows us to leverage companies’ existing relationships and channels to reach our end users at scale. Utilities would be interested in offering our technology because it supports grid stability by reducing peak energy consumption. Solar panel and battery vendors need to demonstrate savings, and our product yields significant savings over current approaches.

Data Science Approach

Reinforcement learning is a type of machine learning that involves an agent learning to make decisions in an environment in order to maximize a reward signal. To understand this, imagine a scenario where you're training a dog to perform a trick. You might give the dog a treat when it performs the trick correctly, and withhold the treat when it doesn't. Over time, the dog learns which behaviors lead to a treat and which don't, and it begins to perform the trick more consistently.

There have been several attempts to build models that optimize energy consumption. In general, however, these solutions are built as rule engines, which tend to be complex, hard to maintain and brittle to changes in infrastructure, types of energy consumption or human behavior. In contrast, reinforcement learning can adapt to different household specifications, weather patterns and equipment.

In the context of HES, the RL agent:

- Interacts with devices which constitute the environment: Solar, Battery, EV and HVAC.

- Can observe the state of the environment: the solar, battery and grid capacity, whether a car is connected and the cost.

- Can take a set of possible actions such as charging or discharging the battery and charging the electric vehicle; this leads to the next state.

- Receives a reward in the form of negative cost.

The goal of the agent is to learn a set of actions that lead to the most reward, meaning the lowest cost over a period of a day.
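The agent loop described above can be sketched as a toy one-day environment. This is only an illustration under stated assumptions, not the HES environment: the class and function names, the capacities, the solar profile, the tariff and the EV schedule are all hypothetical, and HVAC, grid export and an EV charge target are left out.

```python
import random
from dataclasses import dataclass


@dataclass
class State:
    hour: int            # hour of day
    solar_kwh: float     # solar energy available this hour
    battery_kwh: float   # energy currently stored in the battery
    ev_connected: bool   # whether the car is plugged in
    price: float         # grid price in $/kWh this hour


class ToyHomeEnv:
    """Toy household for one day. Every constant here is an assumption."""
    BATTERY_CAPACITY = 10.0  # kWh
    RATE = 2.0               # kWh moved per hour when charging or discharging
    BASE_LOAD = 1.0          # kWh of household demand per hour
    ACTIONS = ("idle", "charge_battery", "discharge_battery", "charge_ev")

    def reset(self):
        self.hour = 0
        self.battery = 5.0
        return self._observe()

    def _observe(self):
        h = self.hour
        solar = 3.0 if 9 <= h < 16 else 0.0      # crude midday solar bump
        price = 0.45 if 16 <= h < 21 else 0.25   # crude peak/off-peak tariff
        connected = h < 8 or h >= 18             # car is away during the day
        return State(h, solar, self.battery, connected, price)

    def step(self, action):
        s = self._observe()
        demand, supply = self.BASE_LOAD, s.solar_kwh
        if action == "charge_battery":
            delta = min(self.RATE, self.BATTERY_CAPACITY - self.battery)
            self.battery += delta
            demand += delta
        elif action == "discharge_battery":
            delta = min(self.RATE, self.battery)
            self.battery -= delta
            supply += delta
        elif action == "charge_ev" and s.ev_connected:
            demand += self.RATE
        # Demand not met by solar or battery is bought from the grid.
        grid_kwh = max(0.0, demand - supply)
        reward = -grid_kwh * s.price             # reward is negative cost
        self.hour += 1
        return self._observe(), reward, self.hour >= 24


def random_policy(state, rng):
    return rng.choice(ToyHomeEnv.ACTIONS)


def run_day(policy, seed=0):
    """Run one 24-hour episode and return the total reward."""
    rng = random.Random(seed)
    env = ToyHomeEnv()
    state, total, done = env.reset(), 0.0, False
    while not done:
        state, reward, done = env.step(policy(state, rng))
        total += reward
    return total
```

A trained agent would take the place of `random_policy`. Comparing `run_day(random_policy)` with a fixed policy such as `lambda state, rng: "idle"` gives a feel for how different action choices change the day's cost.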

To train the model, we expect sensor information from IoT devices in the household. For the MVP, we replicate the sensor information to represent 80% of the households in California.

We use Proximal Policy Optimization (PPO), which is a popular algorithm in the field of reinforcement learning.
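For readers new to PPO, its core idea is a clipped surrogate objective that keeps each policy update close to the previous policy. The sketch below computes that standard objective for a batch of samples in plain Python. The function name and the `clip_eps=0.2` default are illustrative choices, and this is not a description of the PPO implementation or settings that HES uses.

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch (to be maximized).

    new_logp / old_logp: log-probabilities of the taken actions under the
    current and the previous policy; advantages: advantage estimates.
    """
    total = 0.0
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

When the new policy makes a good action (positive advantage) much more likely, the gain is capped at `1 + clip_eps` times the advantage, which removes the incentive to move far from the old policy in a single update.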

Introduction to HES

Home Energy Steward is a solution that integrates with a household's energy hub, constantly receives sensor information from various IoT devices in the house and dynamically manages power storage and usage to minimize cost.

The user can quickly and securely access their personal HES dashboard on their favorite device and review their cost and consumption in real time.

In future iterations, the steward will allow devices to be included in or excluded from management, and will add much more fine-grained control over how the user wants the steward to manage their devices.

Evaluation

How do we assert that the Home Energy Steward is learning? We start by defining metrics, of course. We treat this problem as a zero-sum game of energy availability and demand. Any energy demand that cannot be satisfied with solar energy or the battery is drawn from the grid, and a cost is incurred.

The most obvious metric is this energy cost for the household. The other, more powerful metric is energy availability and demand in the household. We collect fine-grained data from the steward as it interacts with the environment to build metrics and evaluate the steward’s behavior. We also need a baseline to compare the steward against. We define that by asking ourselves: what usage pattern would represent 80% of our users? We then create baseline cost and consumption measures accordingly. We also rely on visualizing the steward’s actions to highlight unintuitive behavior that needs fixing.
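One way to turn per-step data into the metrics described above is sketched below. It assumes a hypothetical log record with demand, solar used, battery used and price for each time step. The field names, the `self_supplied_share` metric and the savings formula are illustrative and are not taken from the HES codebase.

```python
from dataclasses import dataclass


@dataclass
class StepLog:
    demand_kwh: float        # household demand in this step
    solar_used_kwh: float    # demand covered by solar
    battery_used_kwh: float  # demand covered by battery discharge
    price: float             # grid price in $/kWh

    @property
    def grid_kwh(self):
        # Whatever solar and battery cannot cover is drawn from the grid.
        return max(0.0, self.demand_kwh - self.solar_used_kwh
                   - self.battery_used_kwh)


def summarize(logs):
    """Aggregate step logs into cost and availability/demand metrics."""
    demand = sum(s.demand_kwh for s in logs)
    grid = sum(s.grid_kwh for s in logs)
    cost = sum(s.grid_kwh * s.price for s in logs)
    share = 1.0 - grid / demand if demand > 0 else 0.0
    return {"cost": cost, "grid_kwh": grid, "self_supplied_share": share}


def savings_vs_baseline(steward_cost, baseline_cost):
    """Fractional cost reduction relative to the baseline usage pattern."""
    if baseline_cost <= 0:
        raise ValueError("baseline cost must be positive")
    return (baseline_cost - steward_cost) / baseline_cost
```

Running `summarize` on logs from both the steward and the baseline usage pattern, then passing the two costs to `savings_vs_baseline`, gives a single number to track across training runs.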

Key Learnings & Impact

Reinforcement learning and its application to the energy domain are both critical to realizing HES as a viable product. In the environment scoped for the MVP, controlling the behavior of all the constituent devices (EV, HVAC and energy storage) is a fine act of balancing the reward policies applied to the RL agent. Calibrating the reward policies is an exercise in iterating over model training and evaluation: anticipating not only how each reward policy update will make the agent behave well in some situations, but also how it will regress in others, and striking the right balance.
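To make the balancing act concrete, here is a minimal sketch of a weighted reward that trades off energy cost against device-specific penalties. The penalty terms (EV charge shortfall, temperature deviation), the weights and all names are assumptions chosen for illustration. The actual reward terms used in HES are not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    cost: float = 1.0          # weight on energy cost in dollars
    ev_shortfall: float = 0.5  # weight on kWh missing from the EV at departure
    comfort: float = 0.2       # weight on degrees away from the HVAC setpoint


def shaped_reward(cost_usd, ev_shortfall_kwh, temp_deviation_c,
                  w=RewardWeights()):
    """Negative weighted sum of cost and (hypothetical) device penalties."""
    penalty = (w.cost * cost_usd
               + w.ev_shortfall * ev_shortfall_kwh
               + w.comfort * abs(temp_deviation_c))
    return -penalty
```

Raising one weight makes the agent care more about that device but can cause regressions elsewhere. For example, a large `ev_shortfall` weight may push the agent to charge the car during peak prices, which is why each change needs to be re-evaluated across situations.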

We also realized that the PPO algorithm, though simple to configure hyperparameters for, is nevertheless extremely sensitive to the hyperparameter values. Consequently, each training iteration needs a pre-calibration step to ensure the right hyperparameters are used. Getting this right was a major milestone for us in achieving clean and consistent learning.

Since applying RL to a problem is not as well structured as, for instance, a supervised-learning problem, we anticipated that our model evaluation would need bespoke metrics, evaluation data and visualization mechanisms. Focusing on these aspects early in the product development was critical to our success.

We believe that the exponential growth of global energy demand and costs is a problem that cannot be solved by focusing only on the supply side. Optimizing usage on the demand side is essential to minimizing load on the grid. HES is designed to supercharge this effort from the ground up.

Acknowledgements

We are grateful to Joyce Shen and Fred Nugen for their invaluable input as our project advisors and mentors. We are also grateful to John-Peter (JP) Dolphin and David Biagioni for helping us with technical and industry insights.