“Don't Trip Over Your Trip”: A More Localized, Personalized Recommendation Engine for Travelers

Our Motivation

Finding the right restaurant, bar, or other amenity in an unfamiliar location can be stressful. Vacationers typically have one or two major attractions in mind that draw them to a destination. These play an important anchoring role in vacation planning, but travelers must also plan a significant amount of time outside of these headline experiences. Planning a vacation is often laborious and stressful. If you rely on traditional search results from Yelp, Google, Bing, and the like, you risk missing truly incredible local experiences just off the well-worn path.

Our Solution

We aim to make sure vacationers can be confident they'll never miss a city's hidden gems. Our application frees up vacationers' most valuable resource, their time, and taps into the wisdom of locals who are similar to them to ensure every vacation is unforgettable. Using review data from locals, we determine which reviewers are most like each vacationer and deliver recommendations as if our customers had local friends on the ground. Our solution:

- Identifies others with similar preferences to the user

- Prioritizes the knowledge of locals over that of visitors

- Recommends relevant attractions to the user
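The three steps above can be illustrated with a toy, neighborhood-style sketch. This is an illustrative assumption, not the models described under Our Approaches. It first finds similar reviewers using cosine similarity over co-rated businesses (step 1). It then multiplies the weight of reviewers flagged as locals by a hypothetical `local_weight` factor (step 2). Finally, it ranks businesses the traveler hasn't reviewed yet (step 3). The data, the `is_local` flags, and the function names are all hypothetical.

```python
from math import sqrt

# Hypothetical toy data: user -> {business: star rating}
ratings = {
    "traveler": {"museum": 5, "taqueria": 4},
    "local_a": {"museum": 5, "taqueria": 5, "jazz_bar": 5},
    "visitor_b": {"museum": 4, "taqueria": 4, "chain_diner": 5},
}
# Hypothetical flag: True if the reviewer lives in the destination city
is_local = {"local_a": True, "visitor_b": False}


def cosine(a, b):
    """Cosine similarity computed over the businesses both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[k] * b[k] for k in shared)
    na = sqrt(sum(a[k] ** 2 for k in shared))
    nb = sqrt(sum(b[k] ** 2 for k in shared))
    return dot / (na * nb)


def recommend(user, ratings, is_local, local_weight=2.0, top_n=3):
    """Rank unseen businesses by similarity-weighted stars, boosting locals."""
    seen = set(ratings[user])
    scores = {}
    for other, their in ratings.items():
        if other == user:
            continue
        w = cosine(ratings[user], their)      # step 1: similar reviewers
        if is_local.get(other, False):
            w *= local_weight                 # step 2: prioritize locals
        for biz, stars in their.items():
            if biz not in seen:               # step 3: only new places
                scores[biz] = scores.get(biz, 0.0) + w * stars
    return sorted(scores, key=scores.get, reverse=True)[:top_n]
```

Here `recommend("traveler", ratings, is_local)` ranks the local's `jazz_bar` above the visitor's `chain_diner`. Setting `local_weight` below 1 flips that order, which shows how the local boost drives the result.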

Want to see our beta product in action? Check out this demo video!

Our (Hypothetical) Business Model

Our Approaches

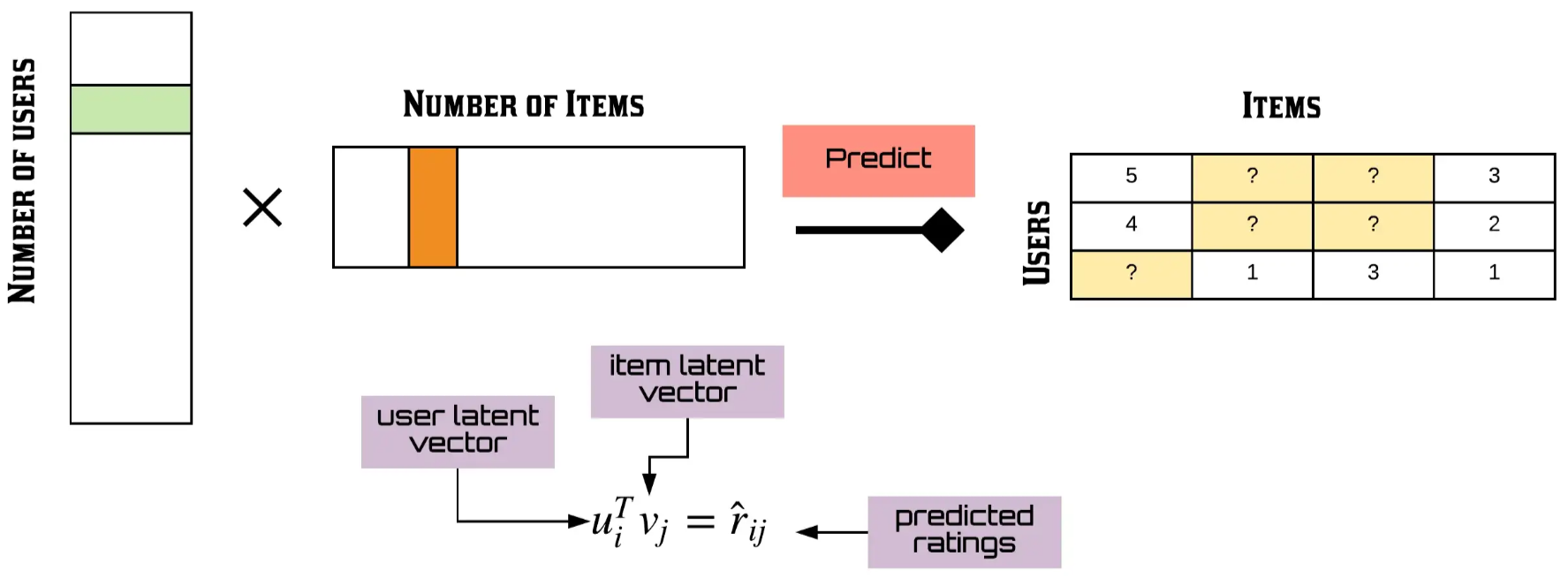

Matrix Factorization (image credit)

One of the original factorization machine models, a generalization of matrix factorization, was proposed by Rendle in 2010 (read the original paper). It is a general-purpose predictor that works reliably with sparse data. Its model equation can be computed in linear time, so it scales to large datasets. Since 2010, factorization machines have typically been used for regression, binary classification, and ranking tasks. They are, however, challenged by the "cold-start" problem: they have trouble making recommendations for users or items that did not appear in the training data.
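The linear-complexity claim can be made concrete with a minimal pure-Python sketch of the factorization machine model equation, ŷ(x) = w0 + Σ w_i x_i + Σ_{i<j} ⟨v_i, v_j⟩ x_i x_j. The pairwise term can be rewritten as ½ Σ_f [(Σ_i v_{i,f} x_i)² − Σ_i v_{i,f}² x_i²], which costs O(k·n) instead of O(k·n²). The function names and toy weights are hypothetical. The naive version is included only to check that the two formulations agree.

```python
def fm_predict(x, w0, w, V):
    """Factorization machine score in O(k*n) via Rendle's reformulation.

    x: feature values (length n); w0: global bias; w: linear weights (length n);
    V: n x k latent factor matrix as a list of lists.
    """
    n, k = len(x), len(V[0])
    linear = w0 + sum(w[i] * x[i] for i in range(n))
    pairwise = 0.0
    for f in range(k):
        s = sum(V[i][f] * x[i] for i in range(n))             # sum_i v_if * x_i
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(n))  # sum_i (v_if * x_i)^2
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


def fm_predict_naive(x, w0, w, V):
    """Same model in O(k*n^2): explicit sum over all feature pairs i < j."""
    n = len(x)
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(V[i], V[j]))
            score += dot * x[i] * x[j]
    return score


# Hypothetical one-hot encoding: 3 user columns followed by 2 item columns
x = [1, 0, 0, 1, 0]            # user 0 interacting with item 0
w0 = 0.5
w = [0.1, 0.2, 0.3, 0.4, 0.5]
V = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5], [0.4, 0.4], [-0.2, 0.1]]
```

With one-hot user and item columns like these, the pairwise term reduces to the dot product of the active user's and item's factor rows. This is where cold start comes from: a user or item absent from training has no learned factor row to contribute.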

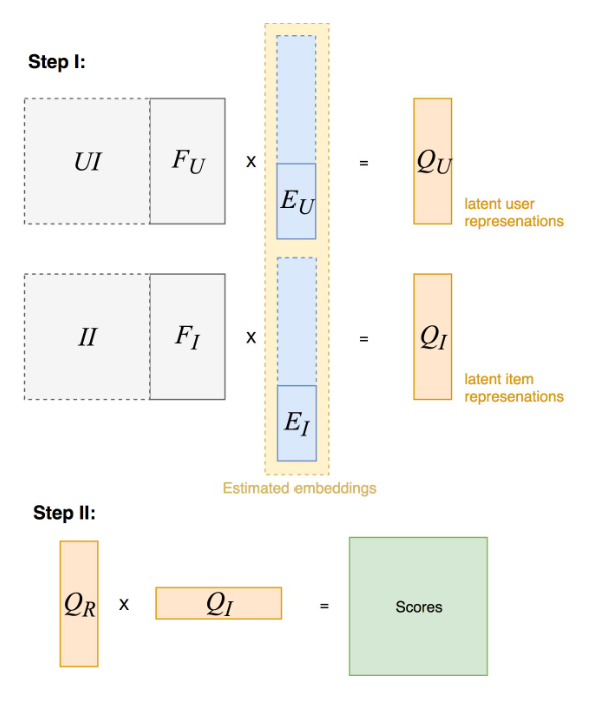

LightFM (image credit)

LightFM is a hybrid model proposed by Kula in 2015 (read the original paper). It performs well both in cold-start settings (a major improvement over the original matrix factorization) and on sparse, low-density interaction data. LightFM learns latent embeddings for user and item features and represents each user and each item as the sum of the embeddings of its content features. When no features are provided, or the features are not informative, it falls back to behaving like a standard collaborative-filtering matrix factorization model.
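A minimal pure-Python sketch of the LightFM-style representation described above follows. Users and items are the sums of their feature embeddings, and the score is their dot product plus summed feature biases. This is not the `lightfm` library API. The feature names, weights, and helpers are hypothetical, and the raw score shown here would be passed through a sigmoid when training with a logistic loss.

```python
def embed(feature_ids, embeddings):
    """Represent an entity as the sum of its features' latent embeddings."""
    k = len(next(iter(embeddings.values())))
    out = [0.0] * k
    for fid in feature_ids:
        for d, val in enumerate(embeddings[fid]):
            out[d] += val
    return out


def score(user_feats, item_feats, user_emb, item_emb, user_bias, item_bias):
    """Dot product of summed embeddings plus summed feature biases."""
    q = embed(user_feats, user_emb)
    p = embed(item_feats, item_emb)
    b = sum(user_bias[f] for f in user_feats) + sum(item_bias[f] for f in item_feats)
    return sum(a * c for a, c in zip(q, p)) + b


# Hypothetical learned parameters (identity features plus content features)
user_emb = {"user:42": [0.1, 0.0], "is_local": [0.5, 0.5]}
item_emb = {"biz:7": [0.2, 0.2], "category:bar": [1.0, 0.0]}
user_bias = {"user:42": 0.0, "is_local": 0.1}
item_bias = {"biz:7": 0.05, "category:bar": 0.0}

# Cold-start user: no identity embedding yet, but content features still help
cold = score(["is_local"], ["biz:7", "category:bar"],
             user_emb, item_emb, user_bias, item_bias)
# Identity features only: reduces to plain matrix factorization with biases
mf = score(["user:42"], ["biz:7"], user_emb, item_emb, user_bias, item_bias)
```

The first call shows why the hybrid approach helps with cold start: a brand-new user still gets a representation from content features such as `is_local`. The second call shows the fallback to matrix factorization when only per-user and per-item identity features are used.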

DeepFM (image credit)

DeepFM, proposed by Guo et al. in 2017 (read the original paper), combines a deep neural network component with a factorization machine component that share the same feature embeddings. This allows the model to learn both low-order and high-order feature interactions automatically. It is a significant improvement, given that typical factorization machines model only pairwise (second-order) feature interactions.
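The DeepFM structure can be sketched as a single forward pass in pure Python. Shared embeddings feed both the FM component (first-order weights plus pairwise embedding dot products) and a small MLP over the concatenated embeddings. The two outputs are summed and passed through a sigmoid. The sketch makes several simplifying assumptions: one active categorical id per field, hand-set weights, and no training loop. All names and values are hypothetical.

```python
import math


def relu(v):
    return [max(0.0, a) for a in v]


def dense(v, W, b):
    """Fully connected layer: W @ v + b, with W given as a list of rows."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]


def deepfm_forward(field_ids, first_order, emb, hidden_W, hidden_b, out_w, out_b):
    """One DeepFM forward pass for a sample with one active id per field.

    first_order: id -> scalar weight; emb: id -> k-dim embedding, shared by
    both the FM and the deep component.
    """
    vecs = [emb[f] for f in field_ids]
    # FM component: first-order terms + pairwise dot products of embeddings
    y_fm = sum(first_order[f] for f in field_ids)
    for i in range(len(vecs)):
        for j in range(i + 1, len(vecs)):
            y_fm += sum(a * b for a, b in zip(vecs[i], vecs[j]))
    # Deep component: MLP over the concatenated (same) embeddings
    h = [x for v in vecs for x in v]
    for W, b in zip(hidden_W, hidden_b):
        h = relu(dense(h, W, b))
    y_dnn = sum(w * x for w, x in zip(out_w, h)) + out_b
    # Final prediction: sigmoid of the summed component outputs
    return 1.0 / (1.0 + math.exp(-(y_fm + y_dnn)))


# Hypothetical fields: user, business, city (2-dim embeddings)
emb = {"user:1": [1.0, 0.0], "biz:2": [0.5, 0.5], "city:3": [0.0, 1.0]}
first_order = {"user:1": 0.0, "biz:2": 0.0, "city:3": 0.0}
hidden_W = [[[1.0, 0.0, 0.0, 0.0, 0.0, 0.0]]]  # one hidden layer, one unit
hidden_b = [[0.0]]
prob = deepfm_forward(["user:1", "biz:2", "city:3"], first_order, emb,
                      hidden_W, hidden_b, out_w=[0.5], out_b=0.0)
```

Here the FM part contributes the low-order (pairwise) signal and the MLP contributes whatever higher-order signal its layers learn. Both are read from the same embedding table, which is the key design choice that distinguishes DeepFM from training the two models separately.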

DCNv2 (image credit)

DCNv2, developed by Wang et al. in 2020 (read the original paper), combines the implicit high-order feature crosses learned by DNNs with explicit, bounded-degree feature crosses of the kind that have proven effective in linear models. Its Cross Network component learns explicit feature interactions, while its Deep Network component learns complementary implicit interactions. The authors designed the architecture to be cost-efficient enough for large-scale production systems.
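The core of the Cross Network is the DCNv2 cross layer, x_{l+1} = x_0 ⊙ (W_l x_l + b_l) + x_l, where ⊙ is elementwise multiplication. The following is a minimal pure-Python sketch of stacked cross layers only. The Deep Network, and the choice of stacking it after or running it in parallel with the cross layers, are omitted. The weights and helper names are hypothetical.

```python
def cross_layer(x0, xl, W, b):
    """One DCNv2 cross layer: x0 * (W @ xl + b) + xl, with * elementwise."""
    proj = [sum(w * v for w, v in zip(row, xl)) + bi for row, bi in zip(W, b)]
    return [a * p + c for a, p, c in zip(x0, proj, xl)]


def cross_network(x0, layers):
    """Apply cross layers in sequence; L layers reach polynomial degree L + 1."""
    x = x0
    for W, b in layers:
        x = cross_layer(x0, x, W, b)
    return x


identity = [[1.0, 0.0], [0.0, 1.0]]
zero_bias = [0.0, 0.0]
out = cross_network([1.0, 2.0], [(identity, zero_bias), (identity, zero_bias)])
```

With identity weights and zero bias, one layer maps x0 to x0² + x0 elementwise, and two layers give x0³ + 2x0² + x0, so `out` is `[4.0, 18.0]`. This illustrates the bounded degree: each extra layer raises the maximum polynomial order by one. With a learned, non-diagonal W, the products mix different input features, producing explicit cross terms such as x_i · x_j.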

Our Features

Feature engineering can make all the difference when building a more personalized recommendation engine. Our baseline was a simple LightFM deployment with no features, using only user-item pairings. To beat its performance, our team engineered several features from the original data, hypothesizing that additional information about users, the attractions in each destination, and other metadata would improve predictive power.

Users

- Average star-ratings given

- Average sentiment of the textual reviews given

- Localization score (i.e., whether the reviewer is a local expert on the area or a visitor)

- Gender spectrum score
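The user aggregates above could be computed roughly as in the following sketch, assuming review records that carry a city and a precomputed per-review sentiment score in [-1, 1]. The localization score shown here is a hypothetical definition: the share of a user's reviews that fall in the city they review most often, used as a proxy for where they live. The gender spectrum score is omitted because its derivation isn't described here. All record fields and names are assumptions.

```python
from collections import Counter, defaultdict
from statistics import mean

# Hypothetical review records: (user_id, business_id, city, stars, sentiment)
reviews = [
    ("u1", "b1", "Austin", 5, 0.9),
    ("u1", "b2", "Austin", 4, 0.6),
    ("u1", "b3", "Denver", 2, -0.4),
    ("u2", "b1", "Austin", 3, 0.1),
]


def user_features(reviews):
    """Aggregate per-user averages and a hypothetical localization score."""
    by_user = defaultdict(list)
    for user, _biz, city, stars, sent in reviews:
        by_user[user].append((city, stars, sent))
    feats = {}
    for user, rows in by_user.items():
        cities = Counter(city for city, _, _ in rows)
        home_city, home_count = cities.most_common(1)[0]
        feats[user] = {
            "avg_stars": mean(s for _, s, _ in rows),
            "avg_sentiment": mean(t for _, _, t in rows),
            # Hypothetical: most-reviewed city as a proxy for home city
            "home_city": home_city,
            # Share of the user's reviews written about their home city
            "localization": home_count / len(rows),
        }
    return feats


feats = user_features(reviews)
```

A user whose reviews are concentrated in one city gets a localization score near 1. Someone who reviews a little everywhere scores lower, which is one simple way to separate locals from visitors.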

Businesses

- Average star-ratings received

- Average sentiment of the textual reviews received

- Attraction popularity

- Business location
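Two of the business features above, popularity and location, can be encoded in several ways. The following sketch shows one hypothetical option: popularity as a log-scaled review count (so a few very popular places don't dominate), and location as a coarse latitude/longitude grid-cell id that a model can treat as a categorical feature. The record layout, the `cell_deg` cell size, and the function name are assumptions.

```python
import math
from collections import defaultdict

# Hypothetical review records: (business_id, stars, latitude, longitude)
reviews = [
    ("b1", 5, 30.2672, -97.7431),
    ("b1", 4, 30.2672, -97.7431),
    ("b2", 3, 30.2849, -97.7341),
]


def business_features(reviews, cell_deg=0.01):
    """Per-business average stars, log popularity, and a grid-cell location id."""
    rows = defaultdict(list)
    for biz, stars, lat, lon in reviews:
        rows[biz].append((stars, lat, lon))
    feats = {}
    for biz, rs in rows.items():
        _, lat, lon = rs[0]  # location is a business attribute, same on every row
        feats[biz] = {
            "avg_stars": sum(s for s, _, _ in rs) / len(rs),
            # log1p(review count): damps the long tail of very popular places
            "popularity": math.log1p(len(rs)),
            # Hypothetical encoding: coarse lat/lon grid cell as a category
            "location_cell": f"{math.floor(lat / cell_deg)}_{math.floor(lon / cell_deg)}",
        }
    return feats


feats = business_features(reviews)
```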

Our Results

As is often (frustratingly!) the case, improving upon the state of the art can take months or years. For many of the problems data scientists face today, progress is measured in metaphorical inches, not miles. In our case, a DCNv2 model using all of the features we engineered (listed above) achieved a marginal improvement in prediction over the baseline, as measured by AUC (0.85 versus 0.84). For our (hypothetical) customers, this improvement translates into attraction recommendations that more accurately reflect their preferences.
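For readers less familiar with the metric, ROC AUC can be read as the probability that a randomly chosen positive interaction is scored above a randomly chosen negative one, with ties counting one half. The pairwise sketch below computes exactly that. It is O(P·N) for clarity, whereas library implementations use a sort-based method. The exact AUC variant and evaluation split behind the numbers in the table aren't specified here, so this is illustrative only.

```python
def auc(labels, scores):
    """ROC AUC as P(random positive outscores random negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

Under this reading, moving from 0.84 to 0.85 means the model orders about one more positive/negative pair correctly out of every hundred.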

AUC by model and feature set:

| Model | No Features | User Features | Business Features | All Features | All Features + Categorify |
|---|---|---|---|---|---|
| LightFM | 0.84* | 0.73 | 0.76 | 0.78 | 0.78 |
| DeepFM | 0.74 | 0.82 | 0.75 | 0.82 | 0.83 |
| DCNv2 | - | 0.82 | 0.75 | 0.85** | 0.85** |

*Baseline performance (model to beat)

**Best performing model